Topic Identification System in Python

In this article, we discuss how to create a topic identification system in Python. Such a system classifies text into different categories or topics, and it is a popular application of machine learning in natural language processing (NLP).

Step 1: Import Libraries

We start by importing the necessary libraries: pandas for data handling, scikit-learn for machine learning, and NLTK for natural language processing.

import pandas as pd

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.naive_bayes import MultinomialNB

from sklearn.metrics import accuracy_score

import nltk

from nltk.corpus import stopwords

from nltk.tokenize import word_tokenize

from string import punctuation

Step 2: Load Data

Next, we load the data that we will use to train our topic identification system. In this example, we use a dataset of news articles from different categories and load it with pandas. The later steps expect the file to have a text column and a category column.

df = pd.read_csv('news_articles.csv')
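Before moving on, it can help to confirm that the loaded data has the shape the later steps rely on. The following is a minimal sketch, assuming a two-column layout with text and category; the in-memory sample and the names sample_csv, sample_df, and required_columns are hypothetical and stand in for the real news_articles.csv.

```python
import io

import pandas as pd

# Hypothetical two-column layout; the real news_articles.csv may differ.
sample_csv = io.StringIO(
    "text,category\n"
    '"Stocks rallied after the earnings report.",business\n'
    '"The home team won the final match.",sport\n'
    '"A new phone was unveiled today.",tech\n'
)

sample_df = pd.read_csv(sample_csv)

# Fail early if a column the later steps rely on is missing.
required_columns = {"text", "category"}
missing = required_columns - set(sample_df.columns)
if missing:
    raise ValueError(f"Missing columns: {sorted(missing)}")

# Drop rows with no text or label, since the cleaning step calls string methods.
sample_df = sample_df.dropna(subset=["text", "category"])

# Count articles per category to spot heavy class imbalance.
category_counts = sample_df["category"].value_counts()
print(category_counts)
```

The same checks can be applied to df after loading the real file.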

Step 3: Data Preprocessing

Before we can use the data to train our topic identification system, we need to preprocess it. This involves cleaning the data and converting it into a format that machine learning algorithms can use.

First, we convert all text to lowercase and remove any punctuation. We also remove stop words, which are common words that do not add much meaning to the text.

nltk.download('stopwords')

stop_words = set(stopwords.words('english'))

def clean_text(text):

    # Convert the text to lowercase
    text = text.lower()

    # Remove punctuation characters
    text = ''.join(c for c in text if c not in punctuation)

    # Remove stop words
    text = ' '.join(word for word in text.split() if word not in stop_words)

    return text

df['cleaned_text'] = df['text'].apply(clean_text)
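To see what the cleaning step does to a single sentence, here is a small stand-alone sketch. It mirrors the logic of clean_text but uses a tiny hand-picked stop word set (demo_stop_words, an assumption made so the example runs without downloading the NLTK corpus), so its output will not match NLTK's English list exactly.

```python
from string import punctuation

# A tiny hand-picked stop word set, used here only so the example runs
# without downloading the NLTK corpus; the article uses NLTK's English list.
demo_stop_words = {"the", "a", "is", "on", "of", "and", "to", "in"}


def clean_text_demo(text, stop_words=demo_stop_words):
    """Lowercase, strip ASCII punctuation, and drop stop words."""
    text = text.lower()
    text = "".join(c for c in text if c not in punctuation)
    return " ".join(word for word in text.split() if word not in stop_words)


sample = "The Prime Minister is meeting leaders on Monday, in Paris!"
cleaned = clean_text_demo(sample)
print(cleaned)  # prime minister meeting leaders monday paris
```

One side effect worth knowing: because punctuation is deleted rather than replaced with a space, a hyphenated term such as "state-of-the-art" becomes the single token "stateoftheart".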

Next, we use CountVectorizer from scikit-learn to convert the text into a bag-of-words model. This produces a matrix of word counts in which each row represents a document and each column represents a word.

vectorizer = CountVectorizer()

X = vectorizer.fit_transform(df['cleaned_text'])

Step 4: Train Model

Now that the data is preprocessed, we can use it to train our topic identification model. In this example, we use the Multinomial Naive Bayes algorithm, which is a popular algorithm for text classification.

y = df['category']

model = MultinomialNB()

model.fit(X, y)
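To give a feel for what the classifier computes, here is a simplified, hand-rolled sketch of multinomial Naive Bayes scoring with add-one (Laplace) smoothing. It is an illustration under stated assumptions, not scikit-learn's implementation: the training documents are invented, and the names train, log_score, and predict are hypothetical.

```python
import math
from collections import Counter, defaultdict

# Toy training set; the documents and labels are invented for illustration.
train = [
    ("stocks rally markets", "business"),
    ("markets fall shares", "business"),
    ("team wins match", "sport"),
    ("coach praises team", "sport"),
]

class_doc_counts = Counter(label for _, label in train)
word_counts = defaultdict(Counter)  # label -> word -> count
for text, label in train:
    word_counts[label].update(text.split())

vocab = {word for counts in word_counts.values() for word in counts}


def log_score(text, label, alpha=1.0):
    """Log prior plus smoothed log likelihood of each known word."""
    total_words = sum(word_counts[label].values())
    score = math.log(class_doc_counts[label] / len(train))
    for word in text.split():
        if word not in vocab:
            continue  # words unseen in training are ignored
        numerator = word_counts[label][word] + alpha
        denominator = total_words + alpha * len(vocab)
        score += math.log(numerator / denominator)
    return score


def predict(text):
    """Return the label with the highest log score."""
    return max(class_doc_counts, key=lambda label: log_score(text, label))


print(predict("shares rally"))  # business
print(predict("team match"))    # sport
```

Working in log space avoids multiplying many small probabilities together, and the smoothing term keeps a word that never appeared in a category from forcing that category's probability to zero.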

Step 5: Test Model

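Finally, we test the model on new data to see how well it performs. We load a test dataset and preprocess it in the same way as the training data, then use the trained model to predict the categories of the test articles. Note that the test text is passed through vectorizer.transform rather than fit_transform, so it is mapped onto the vocabulary learned from the training data.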

test_df = pd.read_csv('test_articles.csv')

test_df['cleaned_text'] = test_df['text'].apply(clean_text)

X_test = vectorizer.transform(test_df['cleaned_text'])

y_test = test_df['category']

y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print('Accuracy: ', accuracy)

The output is the accuracy of the model on the test data, that is, the fraction of test articles whose predicted category matches the true one.

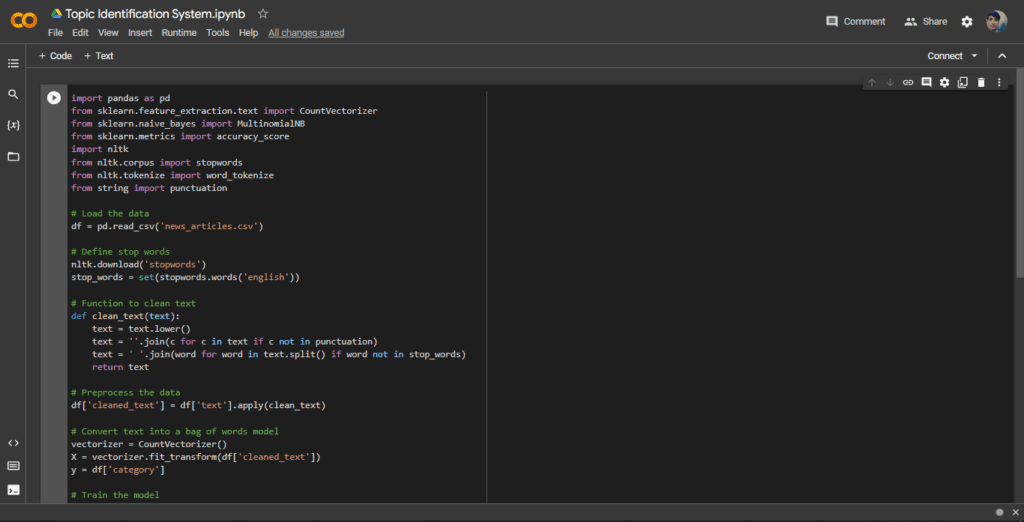
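Accuracy is a single number, so it can hide which categories the model confuses with one another. As a hedged sketch, the example below uses invented label lists (y_true_demo and y_pred_demo, standing in for y_test and y_pred) to show scikit-learn's confusion_matrix and classification_report alongside accuracy_score.

```python
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

# Invented labels standing in for y_test and y_pred.
y_true_demo = ["business", "sport", "sport", "tech", "business"]
y_pred_demo = ["business", "sport", "tech", "tech", "business"]

labels = ["business", "sport", "tech"]

demo_accuracy = accuracy_score(y_true_demo, y_pred_demo)
print("Accuracy:", demo_accuracy)

# Rows are true categories, columns are predicted categories.
matrix = confusion_matrix(y_true_demo, y_pred_demo, labels=labels)
print(matrix)

# Precision, recall, and F1 for each category.
print(classification_report(y_true_demo, y_pred_demo, labels=labels, zero_division=0))
```

Here four of the five predictions are correct, and the confusion matrix shows that the one error is a sport article predicted as tech.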

Complete Code Implementation using Colab

Here is the complete code for the topic identification system in Python, which can be run in Colab:

import pandas as pd

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.naive_bayes import MultinomialNB

from sklearn.metrics import accuracy_score

import nltk

from nltk.corpus import stopwords

from nltk.tokenize import word_tokenize

from string import punctuation

# Load the data

df = pd.read_csv('news_articles.csv')

# Define stop words

nltk.download('stopwords')

stop_words = set(stopwords.words('english'))

# Function to clean text

def clean_text(text):

    # Convert the text to lowercase
    text = text.lower()

    # Remove punctuation characters
    text = ''.join(c for c in text if c not in punctuation)

    # Remove stop words
    text = ' '.join(word for word in text.split() if word not in stop_words)

    return text

# Preprocess the data

df['cleaned_text'] = df['text'].apply(clean_text)

# Convert text into a bag of words model

vectorizer = CountVectorizer()

X = vectorizer.fit_transform(df['cleaned_text'])

y = df['category']

# Train the model

model = MultinomialNB()

model.fit(X, y)

# Test the model

test_df = pd.read_csv('test_articles.csv')

test_df['cleaned_text'] = test_df['text'].apply(clean_text)

X_test = vectorizer.transform(test_df['cleaned_text'])

y_test = test_df['category']

y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print('Accuracy: ', accuracy)
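As an alternative to the step-by-step code above, the vectorizer and classifier can be wrapped in a scikit-learn pipeline so that the same preprocessing is applied at training and prediction time. This is a sketch of a different arrangement, not the approach used in this article: it relies on CountVectorizer's own lowercasing and built-in English stop word list instead of clean_text and NLTK, and the training texts and the name topic_pipeline are invented for illustration.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Invented toy data standing in for df['text'] and df['category'].
train_texts = [
    "Stocks rally as markets open higher.",
    "Shares fall after weak earnings.",
    "The team wins the final match!",
    "Coach praises the team after the match.",
]
train_labels = ["business", "business", "sport", "sport"]

# CountVectorizer lowercases by default, its default tokenizer skips
# punctuation, and stop_words='english' uses scikit-learn's built-in list
# (which differs from NLTK's).
topic_pipeline = make_pipeline(
    CountVectorizer(stop_words="english"),
    MultinomialNB(),
)
topic_pipeline.fit(train_texts, train_labels)

predictions = topic_pipeline.predict(["Markets rally on strong earnings"])
print(predictions)
```

Because the pipeline accepts raw strings, there is no separate cleaned_text column to keep in sync between the training and test data.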

Here are some text classification datasets that you can use to train and test a topic identification system in Python:

- 20 Newsgroups: http://qwone.com/~jason/20Newsgroups/

- Reuters-21578: https://archive.ics.uci.edu/ml/datasets/reuters-21578+text+categorization+collection

- IMDB Movie Reviews: https://ai.stanford.edu/~amaas/data/sentiment/

- AG News: https://www.di.unipi.it/~gulli/AG_corpus_of_news_articles.html

- BBC News Classification: http://mlg.ucd.ie/datasets/bbc.html

- Yelp Reviews: https://www.yelp.com/dataset/challenge

- Amazon Reviews: https://s3.amazonaws.com/amazon-reviews-pds/readme.html

These datasets contain text and corresponding categories that you can use to train and test the topic identification code provided earlier in this article. You can also search Google or Kaggle for other datasets that may better suit your specific use case.